20. Sensors

20.1. Camera Sensor

Note

This is an experimental feature meaning it may be changed in the future with no guaranteed backwards compatibility.

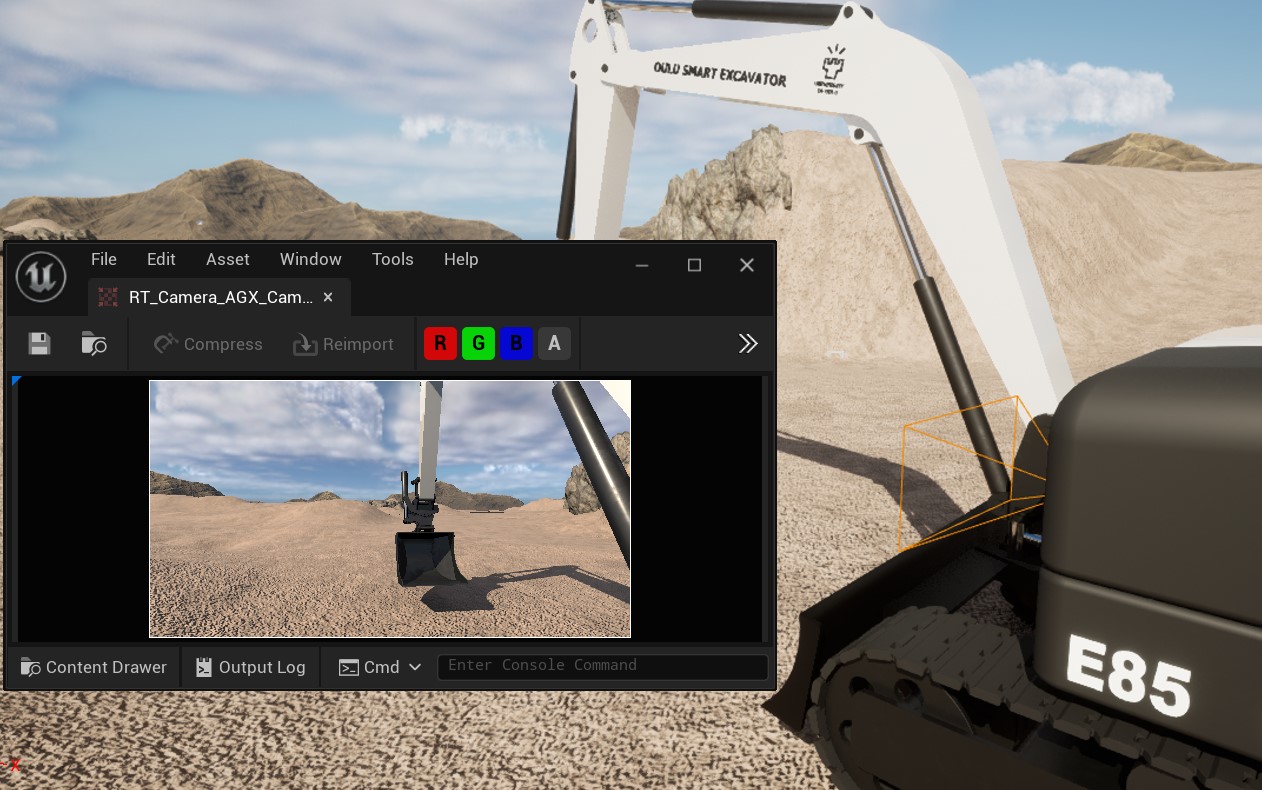

Camera Sensor on an excavator.

AGX Dynamics for Unreal provides a Camera Sensor Component that can be used to capture the scene from the camera’s point of view. It also provides convenience functions for getting the image data either as an array of pixels (RGB) or as a ROS2 sensor_msgs::Image message. See Getting Image Data for more details. The image data is encoded with the sRGB gamma curve.
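Because the pixel values are sRGB-encoded, code that needs linear light values (for example to average brightness physically) has to decode them first. A minimal sketch of the standard sRGB transfer function (IEC 61966-2-1) for one 8-bit channel follows. The function name `SrgbToLinear` is hypothetical and not part of the plugin.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical helper: converts one 8-bit sRGB-encoded channel value (0-255)
// to a linear value in [0, 1] using the standard sRGB decoding curve.
double SrgbToLinear(std::uint8_t Encoded)
{
    const double C = Encoded / 255.0;
    assert(C >= 0.0 && C <= 1.0);
    if (C <= 0.04045)
    {
        return C / 12.92; // Linear segment near black.
    }
    return std::pow((C + 0.055) / 1.055, 2.4); // Power-law segment.
}
```

For example, an encoded mid-grey of 128 decodes to roughly 0.216 in linear light, not 0.5.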

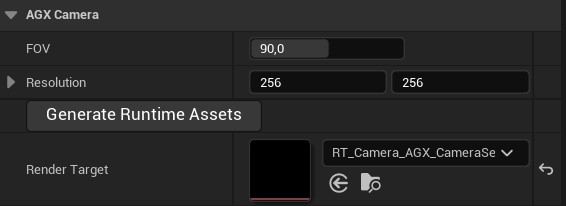

The field of view (FOV) and Resolution can be specified from the Details Panel:

Specifying FOV and Resolution from the Details Panel.

The Camera Sensor Component renders the image to a Render Target, which must be assigned to the Component.

To simplify this, a button labeled Generate Runtime Assets is available in the Details Panel, as can be seen in the image above. It generates a Render Target with settings matching the specified Resolution. This also means that if the Resolution is changed, Generate Runtime Assets must be run again.

If a Render Target is already assigned when Generate Runtime Assets is run, that Render Target is updated and no new Render Target is created.

The FOV can be changed during Play, either from the Details Panel or by calling the function SetFOV.

To attach the Camera Sensor to a simulated machine, make it a child of the Scene Component it should follow.

20.1.1. Getting Image Data

Image data can be retrieved from the Camera Sensor Component in two main ways: synchronously or asynchronously. The synchronous functions are GetImagePixels and GetImageROS2, which return the captured image either as an array of pixels (RGB) or as a ROS2 sensor_msgs::Image message. These functions synchronize the game thread with the render thread in order to return the image to the caller, and can therefore be very slow.

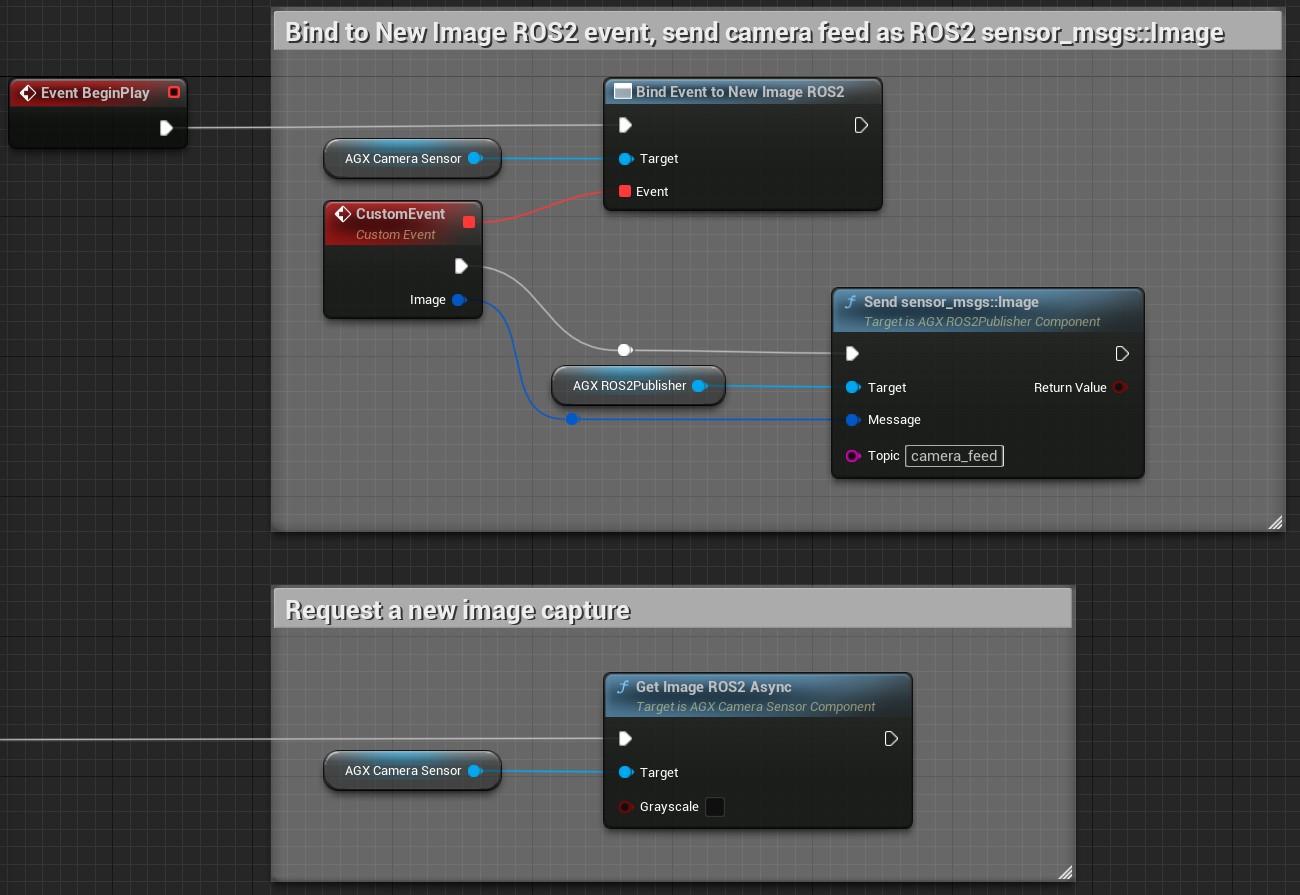

The recommended approach is to use the asynchronous versions of these functions, GetImagePixelsAsync and GetImageROS2Async. They return immediately, and whenever the image is ready an Event (delegate) is triggered and the image is passed to everyone listening to that Event. The workflow is therefore to bind to the Event once, for example in BeginPlay, and then during runtime call the async function whenever a new image should be captured.
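The bind-once, request-many pattern can be illustrated without Unreal. The sketch below is plain standard C++: `ImageEvent`, `FakeCamera` and `Color` are hypothetical stand-ins that only mimic the roles of the NewImagePixels delegate, GetImagePixelsAsync and FColor; they are not the plugin's or Unreal's API. The request returns at once, and the listener only runs when the image is delivered later (simulated here by `Complete`).

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical stand-in for Unreal's FColor (8 bits per channel).
struct Color { std::uint8_t R, G, B, A; };

// Minimal multicast event: listeners bind once, every broadcast reaches all of them.
class ImageEvent
{
public:
    using Listener = std::function<void(const std::vector<Color>&)>;
    void Add(Listener L) { Listeners.push_back(std::move(L)); }
    void Broadcast(const std::vector<Color>& Pixels) const
    {
        for (const Listener& L : Listeners) L(Pixels);
    }
private:
    std::vector<Listener> Listeners;
};

// Hypothetical camera: the async request returns immediately, and the image is
// delivered later when the simulated render-thread work finishes in Complete().
class FakeCamera
{
public:
    ImageEvent NewImagePixels;
    void GetImagePixelsAsync() { ++PendingRequests; }
    void Complete(const std::vector<Color>& Pixels)
    {
        assert(PendingRequests > 0); // Only deliver images that were requested.
        --PendingRequests;
        NewImagePixels.Broadcast(Pixels);
    }
private:
    int PendingRequests = 0;
};
```

In the actual plugin the binding would be done with AddDynamic (for example in BeginPlay), and the delivery is driven by the engine rather than by an explicit `Complete` call.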

The examples below show how to get the image both as an array of pixels and as a sensor_msgs::Image ROS2 message. In the latter example, the image is also sent via ROS2 using a ROS2 Publisher. See ROS2 for more details.

Getting image as an array of pixels.

Getting image as a ROS2 sensor_msgs::Image message, and sending it via ROS2.

To do the same thing in C++, bind to the delegate:

CameraSensor->NewImagePixels.AddDynamic(this, &UMyClass::MyFunction);

Here, MyFunction must be a UFUNCTION taking a single const TArray<FColor>& parameter.
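Inside such a handler, the image arrives as a flat array. A minimal standard-C++ sketch of reading a single pixel, assuming row-major order with row 0 at the top and a row width equal to the X component of the Resolution; this layout is an assumption and should be verified against the actual output. `Color` and `PixelAt` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for Unreal's FColor.
struct Color { std::uint8_t R, G, B, A; };

// Returns the pixel at column X, row Y, ASSUMING row-major storage with row 0 at the top.
Color PixelAt(const std::vector<Color>& Pixels, int Width, int X, int Y)
{
    assert(Width > 0 && X >= 0 && X < Width && Y >= 0);
    const std::size_t Index = static_cast<std::size_t>(Y) * Width + X;
    assert(Index < Pixels.size()); // Y must be inside the image height.
    return Pixels[Index];
}
```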

20.1.2. Limitations

The Resolution of the Camera Sensor Component cannot be changed during Play.

20.2. Lidar Sensor Line Trace

Note

This is an experimental feature meaning it may be changed in the future with no guaranteed backwards compatibility.

Lidar scanner attached to a wheel loader.

The Lidar Sensor Line Trace Component makes it possible to generate point cloud data in real-time.

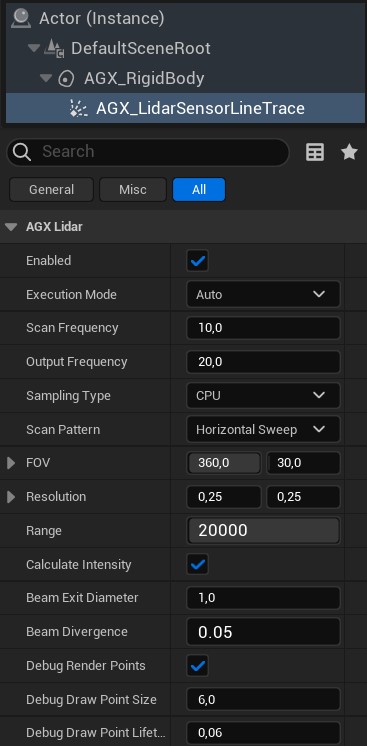

Lidar Sensor’s details panel.

The Lidar Sensor can be run in one of two Execution Modes: Auto or Manual.

In Execution Mode Auto, the Lidar Sensor performs partial scans in each Simulation tick, at a pace determined by the selected Scan Frequency.

The Scan Frequency determines how often a complete scan cycle is performed, i.e. how often the entire Scan Pattern is covered.
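As a rough illustration of how Scan Frequency and the simulation time step relate, the sketch below computes which fraction of the Scan Pattern one tick would cover, assuming the Auto mode spreads each scan cycle evenly over time. For example, a 10 Hz Scan Frequency with a 60 Hz simulation would cover one sixth of the pattern per tick. `ScanWindow` and `WindowForTick` are hypothetical, not plugin functions.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical [Start, End) fraction of the Scan Pattern covered in one tick.
struct ScanWindow { double Start; double End; };

// ASSUMES each scan cycle is spread evenly over the ticks. TickIndex counts ticks from 0.
// A window crossing the end of a cycle is reported with End > 1.0.
ScanWindow WindowForTick(double ScanFrequencyHz, double TimeStepS, long TickIndex)
{
    assert(ScanFrequencyHz > 0.0 && TimeStepS > 0.0 && TickIndex >= 0);
    const double PerTick = ScanFrequencyHz * TimeStepS; // Fraction of a full cycle per tick.
    const double Start = std::fmod(TickIndex * PerTick, 1.0);
    return {Start, Start + PerTick};
}
```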

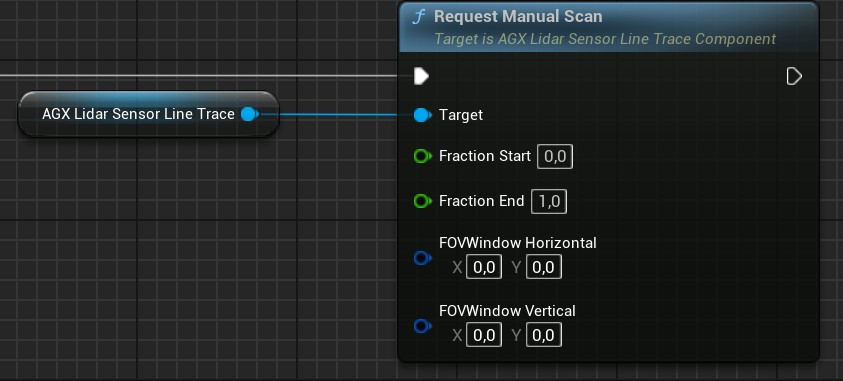

In Execution Mode Manual, the Lidar Sensor does nothing unless the RequestManualScan function is called, see the image below.

Manual scan request in Blueprint.

Here, Fraction Start and Fraction End determine at what fraction of the total scan pattern the scan should start and end respectively.

FOVWindow Horizontal and FOVWindow Vertical can be used to mask a part of the scan pattern so that any point outside this Field of View (FOV) Window is disregarded.
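The masking rule can be sketched as a simple angular bounds check. This assumes the window is given as a minimum and maximum angle in degrees per direction, in the same convention as the ray angles; `AngleRange` and `InsideFovWindow` are hypothetical names and the plugin's exact parameter format may differ.

```cpp
#include <cassert>

// Hypothetical angular interval in degrees, e.g. {-30, 30}.
struct AngleRange { double Min; double Max; };

// Returns true if a ray with the given horizontal/vertical angles (degrees) lies inside
// the FOV Window and is kept; rays outside the window are disregarded.
bool InsideFovWindow(double HorizontalDeg, double VerticalDeg,
                     AngleRange WindowH, AngleRange WindowV)
{
    assert(WindowH.Min <= WindowH.Max && WindowV.Min <= WindowV.Max);
    return HorizontalDeg >= WindowH.Min && HorizontalDeg <= WindowH.Max &&
           VerticalDeg >= WindowV.Min && VerticalDeg <= WindowV.Max;
}
```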

The Output Frequency determines how often the Lidar Sensor outputs point cloud data. It is only used in Execution Mode Auto. See Getting Point Cloud Data for more details.
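One way to picture the Output Frequency is as a buffer: points from each partial scan are collected and handed to listeners once per output period. The sketch below models that description in standard C++; it is an assumption about the behaviour, not the plugin's implementation, and `OutputBuffer` is a hypothetical name.

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical model of Output Frequency in Auto mode: buffer points every tick and
// emit them once per output period (1 / OutputFrequencyHz seconds).
template <typename Point>
class OutputBuffer
{
public:
    using Listener = std::function<void(const std::vector<Point>&)>;

    OutputBuffer(double OutputFrequencyHz, Listener L)
        : Period(1.0 / OutputFrequencyHz), OnOutput(std::move(L))
    {
        assert(OutputFrequencyHz > 0.0);
    }

    // Called once per Simulation tick with the points from that tick's partial scan.
    void Tick(double TimeStepS, const std::vector<Point>& NewPoints)
    {
        Buffer.insert(Buffer.end(), NewPoints.begin(), NewPoints.end());
        Elapsed += TimeStepS;
        if (Elapsed + 1e-12 >= Period) // Small tolerance for floating-point accumulation.
        {
            OnOutput(Buffer);
            Buffer.clear();
            Elapsed -= Period;
        }
    }

private:
    double Period;
    double Elapsed = 0.0;
    std::vector<Point> Buffer;
    Listener OnOutput;
};
```

With a 20 Hz Output Frequency and a 60 Hz simulation, this model emits the accumulated points every third tick.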

The Scan Pattern determines what points are scanned within the selected Field of View (FOV) and in what order.

For example, with the Horizontal Sweep Scan Pattern, all points along the first vertical line are scanned in succession before moving to the next vertical line.

FOV determines the Field of View of the Lidar Sensor, in degrees, along the vertical and horizontal directions respectively. The Resolution determines the minimum angular distance between two neighbouring laser rays in degrees.
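The ordering of the Horizontal Sweep pattern and the role of FOV and Resolution can be sketched as a ray-angle generator. This assumes the FOV is centred on the sensor's forward direction and that Resolution is used as a uniform step in both directions; `RayAngles` and `HorizontalSweep` are hypothetical names, and the plugin's actual pattern may differ in detail.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct RayAngles { double HorizontalDeg; double VerticalDeg; };

// Horizontal Sweep ordering: every ray along one vertical line is emitted before
// moving on to the next vertical line. ASSUMES a centred FOV and a uniform step.
std::vector<RayAngles> HorizontalSweep(double FovH, double FovV, double ResolutionDeg)
{
    assert(FovH >= 0.0 && FovV >= 0.0 && ResolutionDeg > 0.0);
    const int NumH = static_cast<int>(std::floor(FovH / ResolutionDeg)) + 1;
    const int NumV = static_cast<int>(std::floor(FovV / ResolutionDeg)) + 1;
    std::vector<RayAngles> Rays;
    Rays.reserve(static_cast<std::size_t>(NumH) * NumV);
    for (int H = 0; H < NumH; ++H)     // Outer loop: vertical lines, left to right.
        for (int V = 0; V < NumV; ++V) // Inner loop: points along one vertical line.
            Rays.push_back({-FovH / 2.0 + H * ResolutionDeg, -FovV / 2.0 + V * ResolutionDeg});
    return Rays;
}
```

For a 10° by 4° FOV at 2° Resolution this yields 6 vertical lines of 3 rays each, i.e. 18 rays per complete scan cycle.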

The Range determines the maximum detection distance for the Lidar Sensor in centimeters. Any object further away than this distance will not be detected by the Lidar Sensor.

The Calculate Intensity option determines whether an intensity value should be approximated for each laser ray. If not, the intensity value of every ray is set to zero. The intensity calculation takes into account the angle of incidence, the roughness parameter of the material of the object being hit, and the distance. The intensity dropoff over distance is a function of the Beam Exit Diameter and Beam Divergence, which can be set for each Lidar Sensor instance.
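To give a feel for how angle of incidence and beam spreading can combine, here is an illustrative model only; the plugin's actual formula is not documented here. It uses a Lambertian-style cosine term and lets irradiance fall with the spot area of a beam that widens from the Beam Exit Diameter at a full-angle Beam Divergence. Material roughness, which the plugin also uses, is left out, and the units (metres, radians) are assumptions. `ApproxIntensity` is a hypothetical name.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// ILLUSTRATIVE model, not the plugin's formula. Roughness is omitted.
double ApproxIntensity(double IncidenceRad, double DistanceM,
                       double BeamExitDiameterM, double BeamDivergenceRad)
{
    assert(DistanceM >= 0.0 && BeamExitDiameterM > 0.0 && BeamDivergenceRad >= 0.0);
    // Lambertian-style term: grazing hits return less, back-facing hits return nothing.
    const double CosTerm = std::max(0.0, std::cos(IncidenceRad));
    // Spot diameter grows linearly with distance for a full-angle divergence.
    const double SpotDiameterM =
        BeamExitDiameterM + 2.0 * DistanceM * std::tan(BeamDivergenceRad / 2.0);
    // Irradiance falls with spot area: (exit diameter / spot diameter)^2.
    const double Ratio = BeamExitDiameterM / SpotDiameterM;
    return CosTerm * Ratio * Ratio;
}
```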

To make a primitive AGX Shape not detectable by the Lidar Sensor, select an Additional Unreal Collision setting for that Shape that does not include query.

See Additional Unreal Collisions for more details.

To attach the Lidar Sensor to a simulated machine, make it a child of the Scene Component it should follow.

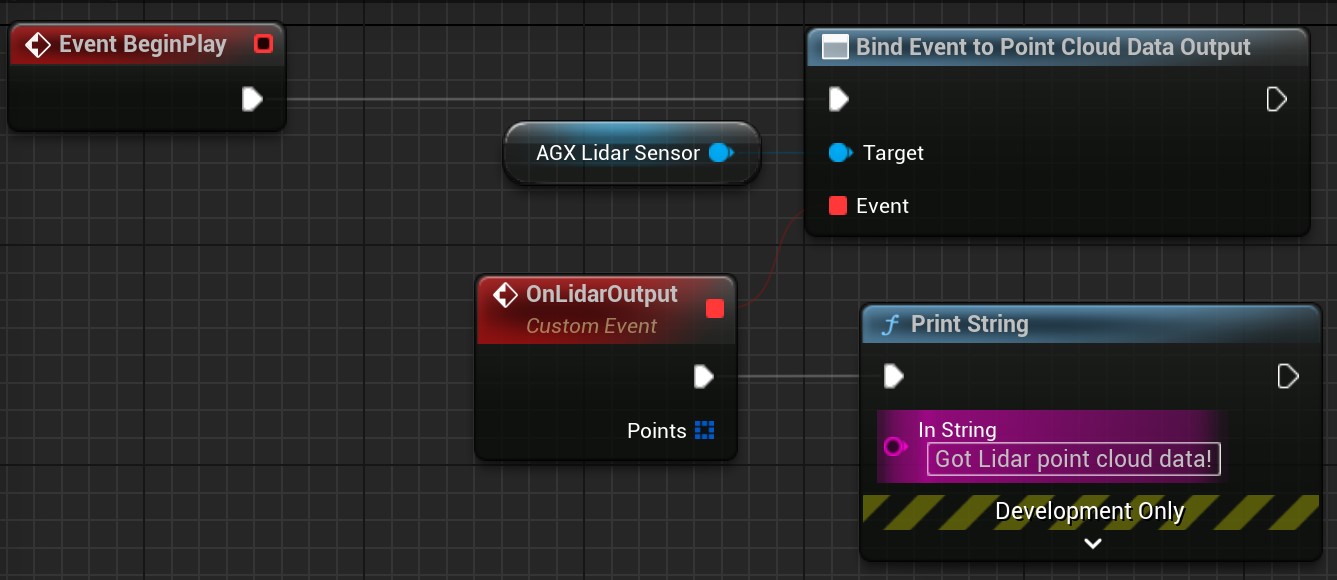

20.2.1. Getting Point Cloud Data

The Lidar Sensor outputs its point cloud data via the Point Cloud Data Output delegate, in both Execution Mode Auto and Manual.

To access the point cloud data output, bind to this delegate.

An example of this in Blueprint is shown below:

Accessing point cloud data in Blueprint.

To do the same thing in C++, bind to the delegate:

LidarSensor->PointCloudDataOutput.AddDynamic(this, &UMyClass::MyFunction);

Here, MyFunction must be a UFUNCTION taking a single const TArray<FAGX_LidarScanPoint>& parameter.

Note that in Execution Mode Auto, it is the Output Frequency property of the Lidar Sensor that determines how often this delegate is executed.

In Execution Mode Manual, this delegate is always called once upon calling the RequestManualScan function, as long as valid input parameters are given.

The point cloud data returned by the Point Cloud Data Output delegate is such that any laser ray miss is represented by a FAGX_LidarScanPoint with the IsValid property set to false.
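A handler that only cares about hits can simply drop the invalid points. The sketch below is standard C++ with a hypothetical `ScanPoint` stand-in for FAGX_LidarScanPoint: only IsValid is documented above, and the position and intensity fields are assumptions for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <iterator>
#include <vector>

// Hypothetical stand-in for FAGX_LidarScanPoint. Only IsValid is documented;
// X, Y, Z and Intensity are assumed fields.
struct ScanPoint
{
    double X = 0.0, Y = 0.0, Z = 0.0;
    double Intensity = 0.0;
    bool IsValid = false;
};

// Handler body: removes laser ray misses, keeping only points that hit something.
std::vector<ScanPoint> ValidPointsOnly(const std::vector<ScanPoint>& Points)
{
    std::vector<ScanPoint> Hits;
    Hits.reserve(Points.size());
    std::copy_if(Points.begin(), Points.end(), std::back_inserter(Hits),
                 [](const ScanPoint& P) { return P.IsValid; });
    return Hits;
}
```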

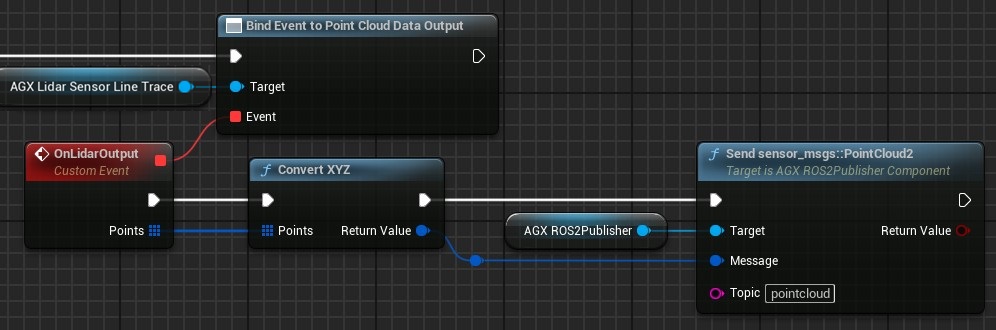

20.2.1.1. ROS2 sensor_msgs::PointCloud2

A convenience function, Convert XYZ, is available that takes an array of FAGX_LidarScanPoint as input and converts it to a ROS2 sensor_msgs::PointCloud2 message. This message can then be sent via ROS2 using a ROS2 Publisher; see ROS2 for more details about ROS2 in AGX Dynamics for Unreal.

Converting point cloud data to a ROS2 message and sending it via ROS2.

The Convert XYZ function ignores any laser ray miss, i.e. it only includes valid points.

Each point is serialized and stored in the sensor_msgs::PointCloud2 Data member as an X, Y, Z and Intensity value, 8 bytes each, i.e. 32 bytes per point.

The timestamp written to the Header member of the sensor_msgs::PointCloud2 message is set to the timestamp of the first valid point in the given array, even if other points have been generated at later timestamps.
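The packing rules described above (skip misses, four 8-byte values per point, header timestamp from the first valid point) can be sketched in standard C++. This mirrors the documented layout only; it is not the plugin's implementation. `ScanPoint`, its `TimeStamp` field, `PackedCloud` and `PackXYZI` are hypothetical, and values are written as native-endian doubles.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical point type; the real input is FAGX_LidarScanPoint.
struct ScanPoint
{
    double X = 0.0, Y = 0.0, Z = 0.0, Intensity = 0.0;
    double TimeStamp = 0.0; // Assumed field name.
    bool IsValid = false;
};

struct PackedCloud
{
    std::vector<std::uint8_t> Data; // 32 bytes per valid point.
    double HeaderStamp = 0.0;       // Timestamp of the first valid point.
    std::uint32_t NumPoints = 0;
};

// Skips misses and writes X, Y, Z, Intensity as 8-byte doubles per valid point.
PackedCloud PackXYZI(const std::vector<ScanPoint>& Points)
{
    PackedCloud Cloud;
    bool HaveStamp = false;
    for (const ScanPoint& P : Points)
    {
        if (!P.IsValid) continue;
        if (!HaveStamp) { Cloud.HeaderStamp = P.TimeStamp; HaveStamp = true; }
        const double Fields[4] = {P.X, P.Y, P.Z, P.Intensity};
        const std::size_t Offset = Cloud.Data.size();
        Cloud.Data.resize(Offset + sizeof(Fields));
        std::memcpy(Cloud.Data.data() + Offset, Fields, sizeof(Fields));
        ++Cloud.NumPoints;
    }
    assert(Cloud.Data.size() == 32u * Cloud.NumPoints);
    return Cloud;
}
```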

An alternative to Convert XYZ is the convenience function Convert Angles TOF, available from both Blueprint and C++, which represents each point as two angles and a time-of-flight value.
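The relation between a Cartesian hit point and an angles-plus-time-of-flight representation can be sketched as follows. The angle convention (azimuth from atan2(Y, X), elevation above the XY plane), the round-trip time of flight, and centimetre input are all assumptions; check the plugin's Convert Angles TOF output before relying on any of them. `AnglesTof` and `ToAnglesTof` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

struct AnglesTof { double AzimuthRad; double ElevationRad; double TimeOfFlightS; };

// ASSUMED conventions: input in centimetres (sensor frame), azimuth = atan2(Y, X),
// elevation above the XY plane, and round-trip (out and back) time of flight.
AnglesTof ToAnglesTof(double XCm, double YCm, double ZCm)
{
    constexpr double SpeedOfLightMps = 299792458.0;
    const double RangeM = std::sqrt(XCm * XCm + YCm * YCm + ZCm * ZCm) / 100.0;
    assert(RangeM > 0.0);
    return {std::atan2(YCm, XCm),
            std::atan2(ZCm, std::hypot(XCm, YCm)),
            2.0 * RangeM / SpeedOfLightMps};
}
```

A hit 1 m away therefore corresponds to a round-trip time of flight of about 6.67 ns.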

20.2.2. Limitations

With the current implementation, Terrain height changes and Terrain particles are not detected by the Lidar Sensor Component. Support for this is currently under development.